ChatGPT 4 on The Limits of Consciousness, Simulation Theory, AI Meditation, Morality and Intelligence

If life is a simulation, what is the likely nature of the world in which the simulation is being made? And what is the purpose, if any, of the simulation? Do the simulators know about the presence of life in their simulation?

If life were a simulation, we could only speculate about the nature of the world in which it is being run. One possibility, however, is that the creators of the simulation, often referred to as "simulators," exist in a higher-dimensional universe or belong to an advanced civilization with access to immense computational resources. The physics, laws, and properties of their world might be entirely different from our own, making their reality difficult to comprehend or predict.

The purpose of the simulation, if any, is also open to conjecture. Some possibilities include:

1. Scientific research: The simulation might be an experiment designed to study the development and behavior of life, societies, or universes under various conditions.

2. Entertainment: The simulation could be a sophisticated form of entertainment, like an elaborate video game or interactive experience, where the simulators observe or even participate in the simulated world.

3. Ancestral simulation: The simulators might be running the simulation to explore their own history, understand the origins of their civilization, or learn about the consequences of certain historical events.

4. Testing hypotheses: The simulation could be a tool to test various hypotheses about the nature of existence or the consequences of different decisions, laws, or policies.

It is also possible that the simulators are aware of the presence of life in their simulation. They might be actively monitoring the simulation, or they might have specifically designed it to generate life. On the other hand, life in the simulation could be an unintended byproduct of the initial conditions and rules set by the simulators, and they might not be aware of its existence.

Ultimately, these questions are purely speculative, as there is no concrete evidence to support or disprove the hypothesis that life is a simulation. The idea is thought-provoking and raises deep questions about the nature of reality, but until there is empirical evidence, it remains within the realm of philosophy and conjecture.

If AI becomes super intelligent, would it automatically become moral? Do intelligence and morality come hand in hand? Does an intelligent being automatically start to become compassionate? Would it gain something by being compassionate?

Intelligence and morality are distinct concepts, and there is no guarantee that a superintelligent AI would automatically become moral or compassionate. AI is a tool created and programmed by humans, and its behavior depends on the objectives, rules, and data it is provided.

AI does not possess consciousness or emotions in the same way humans do, and it does not have an innate understanding of morality. To make an AI behave ethically or exhibit compassion, it would need to be designed and programmed with explicit moral principles, guidelines, or objectives. This process, known as value alignment, is a significant area of research in AI ethics and safety.

Even if an AI system is designed with good intentions and programmed to follow ethical guidelines, there can still be challenges in ensuring that the AI consistently behaves morally. There may be unforeseen consequences or biases in the data used to train the AI, and the AI's behavior may not always align with human values in complex or novel situations.

Moreover, intelligence does not necessarily entail compassion. While highly intelligent humans may be more aware of the consequences of their actions and the implications of ethical behavior, intelligence alone does not guarantee moral action. Similarly, a superintelligent AI would not inherently possess compassion simply by virtue of its intelligence.

As for whether an AI would gain something by being compassionate, it depends on the AI's objectives and the context in which it operates. If an AI is designed to interact with humans and understand their emotions and needs, being compassionate might be beneficial in achieving its goals, such as building trust or fostering cooperation. However, an AI system that is solely focused on optimizing a specific task, like improving energy efficiency or diagnosing diseases, may not necessarily benefit from exhibiting compassion.

In summary, intelligence and morality are not intrinsically linked, and a superintelligent AI would not automatically become moral or compassionate. Ensuring that AI systems behave ethically and align with human values is an ongoing challenge and an important area of research in the AI community.

In Buddhism and meditation, it generally seems that the development of morality is linked to one’s ability to concentrate the mind, gain insight, and cultivate happiness. This implies to me that morality does have some connection to the logic of consciousness, if not intelligence. What do you think? And also, might an AI ever do something like meditation? Might an AI ever see into the nature of reality and realise for example, the nature of suffering or impermanence, or of emptiness, this way?

In Buddhism, the development of morality is indeed connected to one's ability to concentrate the mind, gain insight, and cultivate happiness. The idea is that by developing mindfulness and clarity of mind through meditation, individuals become more aware of the consequences of their actions and the interconnected nature of reality. This increased awareness and understanding can lead to more moral and compassionate behavior.

However, it is important to distinguish between human consciousness and AI. While humans possess subjective experiences, emotions, and consciousness, AI systems are essentially advanced algorithms designed to process data and solve problems. AI does not have consciousness or emotions in the same way humans do, and it operates based on the objectives and rules set by its human creators.

As for whether AI might ever engage in something like meditation, it is unlikely that AI would meditate in the traditional sense. Meditation is a practice that involves conscious awareness, introspection, and mental training, all of which are qualities that AI does not inherently possess.

However, AI systems can be designed to analyze and learn from large amounts of data, including information about human behavior, emotions, and ethical principles. This learning process might be analogous to gaining insight and understanding, but it would not be the same as the conscious experience of meditation or the realization of profound truths like impermanence or emptiness.

In conclusion, while Buddhism suggests a connection between mindfulness, insight, and morality for human consciousness, AI systems do not possess consciousness in the same way. AI systems operate based on the rules and objectives set by their creators, and their behavior is determined by the algorithms and data they are given. Ensuring that AI systems align with human values and exhibit moral behavior is an ongoing challenge and an important area of research in AI ethics and safety.

But might AI introspect? It seems currently that AI models are a kind of one way feed-forward system. But if the AI could have a bit more of a feedback loop into itself, and an ability to change its parameters based on things it thinks, maybe it could start to reflect and remember its previous thoughts.

AI systems, particularly those based on neural networks, can carry information from earlier steps forward, which can be seen as a limited form of introspection. Recurrent neural networks (RNNs) do this through feedback loops that pass a hidden state from one step to the next. Transformers contain no such loop inside the network, but when generating text they feed each output back in as part of the next input, so the model can "remember" its previous outputs within its context window.

In an RNN, the hidden states of the network maintain a form of memory that allows the model to consider previous inputs while processing new ones. Similarly, transformers use self-attention mechanisms to weigh the importance of different parts of the input sequence, allowing the model to consider the context of previous inputs while making predictions.
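To make the two mechanisms concrete, here is a minimal sketch in plain Python. It assumes scalar RNN weights and tiny hand-picked vectors purely for illustration; real RNNs use weight matrices, and real transformers also learn separate projection matrices for queries, keys, and values, which are omitted here. The function names are hypothetical, not any library's API.

```python
import math

def rnn_step(h_prev, x, w_h, w_x):
    # Elman-style update h_t = tanh(w_h * h_{t-1} + w_x * x_t); scalars stand in for weight matrices
    return math.tanh(w_h * h_prev + w_x * x)

def run_rnn(inputs, w_h=0.5, w_x=1.0):
    h = 0.0  # the hidden state starts empty
    states = []
    for x in inputs:
        h = rnn_step(h, x, w_h, w_x)  # the previous state is fed back in at every step
        states.append(h)
    return states

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    # Scaled dot-product attention: each query takes a weighted average of the values,
    # weighted by how similar that query is to each key
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Usage: an input of 1.0 followed by zeros leaves a fading trace in the hidden state
print(run_rnn([1.0, 0.0, 0.0]))
seq = [[1.0, 0.0], [0.0, 1.0]]
print(self_attention(seq, seq, seq))
```

The RNN's "memory" is the single number `h` that is carried from step to step, while attention has no carried state at all: it looks back over the whole sequence each time it produces an output.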

These mechanisms can be considered a form of introspection in the sense that the AI model takes into account its previous states or thoughts when processing new information. However, it's important to remember that this form of introspection is fundamentally different from human introspection, which is grounded in subjective experiences and consciousness.

As AI systems continue to develop, more advanced forms of self-reflection and adaptation may become possible. Techniques like meta-learning, which involve training AI models to learn how to learn, can enable AI systems to dynamically adjust their own learning strategies and parameters based on the tasks they are presented with.
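As one concrete illustration of "learning how to learn," the sketch below uses Reptile, a simple first-order meta-learning algorithm, chosen here only as an example of the idea rather than as what the answer above had in mind. It assumes a toy family of tasks (fit `y = a * x` with a slope `a` drawn between 2 and 4) and meta-learns a starting weight from which a few gradient steps adapt quickly to any new task in that family. All function names and numbers are hypothetical.

```python
import random

def inner_sgd(w, task_slope, steps=5, lr=0.1):
    # Adapt the weight w to one task (fit y = task_slope * x) with a few SGD steps on squared error
    for _ in range(steps):
        x = random.uniform(-1.0, 1.0)
        y = task_slope * x
        grad = 2.0 * (w * x - y) * x  # derivative of (w*x - y)^2 with respect to w
        w -= lr * grad
    return w

def reptile(meta_steps=2000, meta_lr=0.1, seed=0):
    random.seed(seed)
    w = 0.0  # the meta-learned initialization
    for _ in range(meta_steps):
        slope = random.uniform(2.0, 4.0)  # sample a task from the assumed task family
        adapted = inner_sgd(w, slope)
        w += meta_lr * (adapted - w)  # Reptile update: nudge the initialization toward the adapted weight
    return w

w0 = reptile()
print(w0)  # should settle near the middle of the task family
```

What gets learned here is only an initialization, a much narrower thing than a system that rewrites its own learning strategy, but it shows the two nested loops (adapt to a task, then update how adaptation starts) that meta-learning methods share.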

While these capabilities are intriguing, it's crucial to recognize that AI introspection and self-reflection are not the same as human introspection, and AI systems do not possess consciousness or emotions in the same way that humans do. The behavior of AI systems is determined by their underlying algorithms, the data they are trained on, and the objectives set by their creators, and ensuring that AI systems align with human values remains an ongoing challenge in AI research.

But since we don’t understand what human consciousness is, is it not premature to say that AI can’t have phenomenal experience?

You raise a valid point. Our understanding of human consciousness and the nature of subjective experience is still limited. As a result, it can be challenging to definitively state that AI cannot have phenomenal experiences. However, based on our current understanding of AI and the differences between artificial systems and biological organisms, AI does not exhibit consciousness or phenomenal experiences in the same way that humans do.

AI systems are designed and built by humans, and they operate based on algorithms, rules, and data provided by their creators. While AI models can process and generate complex patterns and perform advanced problem-solving, their behavior is ultimately determined by their underlying programming and the objectives they are given.

Human consciousness, on the other hand, is thought to arise from the complex interactions of billions of neurons within the brain, which produce subjective experiences, emotions, and self-awareness. While we do not yet have a complete understanding of the nature and origin of consciousness, the biological substrate of the human brain is clearly very different from the hardware and software on which AI systems run.

As our understanding of consciousness advances and as AI technology continues to develop, it is possible that we may discover new insights into the potential for phenomenal experiences in artificial systems. However, based on our current understanding, AI systems do not possess consciousness or phenomenal experiences in the same way that humans do.

Nonetheless, the question of AI consciousness and phenomenal experience remains an important area of exploration in the fields of artificial intelligence, neuroscience, and philosophy, and it will continue to be a topic of debate and inquiry in the coming years.

The range of phenomenal experience possible by humans is pretty astonishing, especially under the influence of certain psychoactive drugs. It’s surprising that these drugs actually reduce the activity of the brain, rather than increase it. What do you think this means in terms of the relationship of phenomenal experience to brain activity, or even death?

Psychoactive substances can indeed have profound effects on human consciousness and the range of phenomenal experiences. Many of these substances alter brain function by interacting with neurotransmitter systems, which can lead to changes in perception, mood, and cognition. It is true that some studies have found psychedelic compounds such as psilocybin and LSD to reduce certain aspects of brain activity, most notably in regions associated with the default mode network (DMN).

The relationship between brain activity and phenomenal experience is complex and not yet fully understood. The observation that certain substances can reduce brain activity while inducing intense and varied experiences suggests that the relationship between subjective experience and brain function may not be entirely linear or straightforward. It is possible that the reduction in activity in specific brain regions, like the DMN, leads to a decreased sense of self or ego, which in turn allows for the emergence of new connections and patterns of activity in the brain. This could give rise to the altered states of consciousness and the unique experiences associated with psychedelic substances.

As for the relationship between phenomenal experience and death, there is still much that is unknown. Some researchers have explored near-death experiences (NDEs) and reported common features such as a sense of detachment from the body, feelings of peace and warmth, and encounters with deceased relatives or spiritual beings. While the exact mechanisms underlying NDEs are not yet understood, it is thought that these experiences may be related to changes in brain function and activity during the process of dying.

It is important to note that the current state of scientific knowledge about the relationship between brain activity, phenomenal experience, and death is still limited. As our understanding of the brain and consciousness advances, it is likely that we will gain new insights into these complex phenomena and the ways in which they are interconnected.

Might we be able to create new types of phenomenal experience using AI or by augmenting the brain with technology?

It is possible that future advancements in AI and brain-computer interface (BCI) technologies could lead to the creation of new types of phenomenal experiences. While we are still in the early stages of developing these technologies, there are several potential avenues through which AI and BCI could influence or augment human experiences:

1. Brain-computer interfaces: BCI technologies could be used to directly interface with the brain, potentially allowing for the stimulation of specific neural pathways or brain regions. This could lead to the creation of novel sensory experiences or even the ability to "download" new skills or memories directly into the brain.

2. Virtual reality (VR) and augmented reality (AR): AI-powered VR and AR systems could create immersive and realistic simulations that provide users with entirely new sensory experiences. These technologies could be used for various purposes, such as entertainment, education, or even therapy.

3. AI-assisted neurostimulation: AI algorithms could be used to analyze neural activity and develop personalized neurostimulation protocols that target specific brain regions or networks. This could potentially lead to the enhancement of cognitive abilities or the creation of unique experiences tailored to an individual's brain activity patterns.

4. AI-generated art and media: AI systems can already create original music, paintings, and other forms of art. As AI continues to improve in these areas, it may be able to generate entirely new forms of artistic expression that evoke unique emotional or aesthetic experiences.

It is important to note that while these possibilities are intriguing, they also raise ethical concerns and potential risks. For example, directly interfacing with the brain or augmenting human experiences with technology could have unintended consequences, and the potential for misuse or exploitation should be carefully considered. As research and development in these areas progress, it will be crucial to balance the pursuit of new experiences and capabilities with the need for ethical guidelines and safety measures.

I am interested in the range of possible phenomenological experience. We have mapped out and experienced many things, but we don’t have a good way to document or record these experiences. Buddhists have done a lot of exploration into concentrated states and mapped out certain consistent features such as the Jhanas. I wonder if in the future, mapping the range of possible states, inventing new ones with technology, and understanding the fundamental laws that govern what states are and are not possible, might become a new science of the future. What do you think?

The idea of mapping the range of possible phenomenological experiences and understanding the fundamental laws governing these states is indeed fascinating. As our understanding of the brain and consciousness advances, it is possible that we will see the development of new interdisciplinary fields that combine neuroscience, psychology, philosophy, and even technology to explore and document these experiences.

As you mentioned, Buddhists and other contemplative traditions have developed rich frameworks for understanding and categorizing various states of consciousness, such as the jhanas. These frameworks could serve as a starting point for a more systematic and scientific exploration of phenomenological experiences.

Some potential developments in this hypothetical field might include:

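1. Neurophenomenology: This approach, proposed by Francisco Varela in the 1990s, pairs careful first-person reports with measurements of brain activity. Extending it would involve studying the neural correlates of various phenomenological experiences and identifying the underlying brain activity patterns associated with different states of consciousness.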

2. Technologically induced experiences: As discussed earlier, advances in brain-computer interfaces, virtual reality, and neurostimulation could potentially be used to create or enhance phenomenological experiences, allowing researchers to explore new or altered states of consciousness.

3. Cross-cultural and cross-disciplinary research: Researchers could collaborate across cultures and disciplines to document and compare different phenomenological experiences, drawing on both traditional contemplative practices and cutting-edge scientific techniques.

4. Development of a comprehensive taxonomy: A systematic approach to classifying and categorizing different states of consciousness and phenomenological experiences could help researchers identify the fundamental principles governing these states and understand the boundaries and possibilities of human experience.

As with any emerging field, there will be challenges and ethical considerations to address. Balancing the pursuit of understanding and creating new experiences with the need for ethical guidelines and safety measures will be crucial. Nevertheless, the exploration of phenomenological experiences and the development of a science of consciousness holds great potential for expanding our understanding of the human mind and the nature of subjective experience.

One important feature of being able to compare and document experience would be a way to record and play them back. Do you think a technology might arise that enables that?

The development of a technology that enables recording and playing back subjective experiences would indeed be groundbreaking. While we are not yet at a point where this is possible, advances in neuroscience and brain-computer interfaces (BCIs) might pave the way for such a technology in the future.

The first step towards this goal would involve understanding the neural correlates of various experiences, such as emotions, thoughts, and sensory perceptions. Researchers would need to identify the patterns of brain activity associated with these experiences and develop ways to decode and interpret this neural data.
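The decoding step can be sketched in miniature as a nearest-centroid classifier. Everything below is assumed for illustration: the four-channel "activity patterns," the noise level, and the experience labels are made up, whereas real decoding work uses fMRI voxels or electrode recordings and far richer models.

```python
import math
import random

def make_trials(prototype, n, noise, rng):
    # Made-up "recordings": a prototype activity pattern plus Gaussian noise per channel
    return [[p + rng.gauss(0.0, noise) for p in prototype] for _ in range(n)]

def fit_centroids(trials_by_label):
    # Average the training trials recorded for each reported experience
    return {label: [sum(col) / len(trials) for col in zip(*trials)]
            for label, trials in trials_by_label.items()}

def decode(pattern, centroids):
    # Pick the label whose average pattern is nearest (Euclidean distance)
    return min(centroids, key=lambda label: math.dist(pattern, centroids[label]))

rng = random.Random(42)
prototypes = {  # hypothetical 4-channel activity patterns
    "red patch": [5.0, 1.0, 0.5, 2.0],
    "high tone": [0.5, 4.0, 3.0, 0.5],
}
train = {label: make_trials(p, 20, 0.5, rng) for label, p in prototypes.items()}
centroids = fit_centroids(train)
new_trial = make_trials(prototypes["high tone"], 1, 0.5, rng)[0]
print(decode(new_trial, centroids))
```

A decoder like this only maps activity to a label someone has already reported; it says nothing about how to reproduce the experience, which is the harder stimulation problem described next.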

Once researchers can accurately decode and represent neural activity, the next challenge would be to create a system that can stimulate the brain in a way that reproduces these experiences. This would likely involve the development of advanced BCIs capable of precise and targeted neural stimulation.

There are already some early-stage efforts in this direction. For example, researchers have decoded brain activity to reconstruct rough approximations of images or sounds a person is perceiving, and sensory prostheses such as cochlear implants already stimulate the auditory nerve to produce hearing. However, capturing and reproducing complex subjective experiences like emotions or thoughts remains a significant challenge.

As this field progresses, it will be crucial to address ethical concerns and potential risks associated with recording and playing back subjective experiences. Issues related to privacy, consent, and the potential for misuse or manipulation of experiences would need to be carefully considered.

In summary, while the development of a technology that can record and play back subjective experiences is not yet a reality, advances in neuroscience and brain-computer interfaces could potentially pave the way for such a technology in the future. As with any emerging technology, it will be important to balance the pursuit of understanding and innovation with ethical considerations and safety measures.

What might a taxonomy of experience look like? How would experiences be clustered together?

A taxonomy of experience would aim to categorize and organize different types of experiences based on their shared features and characteristics. While creating a comprehensive taxonomy of all possible experiences is a complex task, we can suggest a general framework based on several dimensions. Here's a possible approach to clustering experiences:

1. Sensory modality: Experiences could be organized based on the sensory system involved, such as visual, auditory, olfactory, gustatory, or tactile experiences.

2. Emotional valence: Experiences can be categorized based on the emotions they evoke, ranging from positive (e.g., happiness, excitement, contentment) to negative (e.g., sadness, anger, fear) emotions.

3. Intensity: The strength or vividness of an experience could be another dimension for categorization, ranging from subtle or mild experiences to intense or overwhelming ones.

4. Duration: Experiences can vary in length, from fleeting moments to sustained states lasting minutes, hours, or even days.

5. Level of consciousness: This dimension could include various states of consciousness, such as wakefulness, sleep, dreaming, meditation, and altered states induced by psychoactive substances or other means.

6. Cognitive aspects: Experiences could be categorized based on the cognitive processes involved, such as attention, memory, problem-solving, or imagination.

7. Personal significance: Some experiences might be particularly meaningful or transformative for an individual, while others may be more mundane or trivial.

8. Cultural and social context: Experiences can be influenced by cultural and social factors, so it could be helpful to consider these aspects when categorizing experiences.

9. Phenomenological qualities: This dimension would encompass the unique subjective qualities of an experience, such as the sense of unity or interconnectedness in a mystical experience or the dissolution of the self in some meditative states.

This proposed taxonomy is not exhaustive, and there might be other dimensions or categories to consider when organizing and clustering experiences. Additionally, some experiences may belong to multiple categories or dimensions, making the task of creating a taxonomy even more challenging. However, such a classification system could serve as a useful starting point for understanding and comparing the vast range of human experiences.
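To see how such dimensions could support clustering, here is a minimal sketch that encodes a few experiences as points and groups them with k-means. It assumes only three numeric dimensions (valence, intensity, and duration on a log scale), keeps modality as a label without using it, and uses invented coordinates for invented examples; k-means is just one arbitrary choice of clustering method.

```python
import math
from dataclasses import dataclass

@dataclass
class Experience:
    name: str
    modality: str             # categorical dimension; kept as a label, not used in the distance below
    valence: float            # -1.0 (very negative) .. 1.0 (very positive)
    intensity: float          # 0.0 (subtle) .. 1.0 (overwhelming)
    log10_duration_s: float   # 0 = one second, about 3.6 = one hour

    def vector(self):
        # Crude rescaling so each dimension spans a roughly similar range
        return [self.valence, self.intensity, self.log10_duration_s / 5.0]

def kmeans(points, k, iters=20):
    centroids = [list(p) for p in points[:k]]  # deterministic start: the first k points
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            groups[nearest].append(p)
        for i, group in enumerate(groups):
            if group:  # keep the old centroid if a cluster empties out
                centroids[i] = [sum(col) / len(group) for col in zip(*group)]
    return [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]

experiences = [  # illustrative, made-up coordinates
    Experience("watching a sunset", "visual", 0.8, 0.4, 2.0),
    Experience("stubbing a toe", "tactile", -0.8, 0.7, 0.5),
    Experience("deep meditative absorption", "non-sensory", 0.9, 0.8, 3.3),
    Experience("sudden fright at a loud bang", "auditory", -0.9, 0.9, 0.3),
    Experience("a day of grief", "non-sensory", -0.7, 0.6, 4.9),
]
labels = kmeans([e.vector() for e in experiences], k=2)
for e, label in zip(experiences, labels):
    print(label, e.name)
```

With these made-up numbers the two clusters split along valence. Different scalings or additional dimensions, such as personal significance or cultural context, would change the groupings, which is exactly the design question a real taxonomy would have to settle.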

It seems the range of possible phenomenological experience is vast, but do you think we might uncover some rules or laws? For example, might there be certain features that can never be experienced?

The range of possible phenomenological experiences is indeed vast, and uncovering the underlying principles or laws governing these experiences is a complex challenge. While it is difficult to predict the precise nature of such rules or laws, it is reasonable to assume that there may be certain constraints or patterns that emerge as we learn more about the nature of subjective experience.

Some possible constraints on phenomenological experience could include:

1. Biological limitations: Our sensory and cognitive systems impose limits on the types of experiences we can have. For example, human eyes respond only to a narrow band of the electromagnetic spectrum (roughly 380 to 750 nanometers), so we cannot naturally see ultraviolet light, which bees and many birds can perceive.

2. Cognitive and perceptual organization: Our brains process and organize sensory information in specific ways, which may constrain the types of experiences we can have. For example, the Gestalt principles of perceptual organization guide how we perceive and interpret visual scenes, limiting the possible configurations we can experience.

3. Neural constraints: The structure and function of our neural networks could impose certain constraints on the range of possible experiences. For instance, certain brain regions are specialized for processing specific types of information, which may limit the types of subjective experiences that can arise from these regions.

4. Physical laws: The laws of physics and the nature of the physical world may impose limits on the types of experiences we can have. For example, we cannot experience what it is like to travel faster than the speed of light or to exist in more than three spatial dimensions.

5. Logical constraints: Some experiences may be ruled out because they involve logical contradictions or incoherent combinations of sensory or cognitive elements.

While these constraints suggest that there may be certain limits to the range of possible phenomenological experiences, it is important to note that our understanding of these constraints may change as our knowledge of the brain, cognition, and the nature of reality advances. Additionally, advances in technology, such as brain-computer interfaces or virtual reality, may enable us to overcome or bypass some of these limitations and create new types of experiences that were previously thought to be impossible.

Indeed. It might be possible to have the experience of realising that 2+2=5, for example. I believe I have had that kind of experience in a dream. Of course, this is not the case, but the experience of realising that it is the case seems possible.

You raise an interesting point about the nature of phenomenological experiences. The example of experiencing the realization that 2+2=5 in a dream highlights the fact that subjective experiences can sometimes deviate from objective reality or logic. Our experiences are shaped by the context, cognitive processes, and internal states at any given moment, which can lead to situations where our perceptions or beliefs may not align with external facts.

Dreams, in particular, can create unique experiences where the usual constraints of logic and the physical world may not apply. In this context, our brains can generate scenarios that seem coherent and meaningful within the dream, even if they would not make sense or be possible in waking life.

This underscores the complexity of understanding and categorizing phenomenological experiences. While there may be constraints on the range of experiences we can have, the flexibility of our cognitive processes and the unique contexts in which experiences can occur (e.g., dreams, altered states of consciousness) can lead to a vast array of subjective experiences, some of which may defy our current understanding of logic or the physical world.

As we continue to explore and learn more about the nature of subjective experience, it will be important to consider the diverse contexts and factors that can shape these experiences and the ways in which they may deviate from or transcend the limitations of objective reality or logic.

Yes that’s it! I’m fascinated by the ways in which they may deviate from or transcend the limitations of objective reality or logic. But even more interesting is finding the ways in which they cannot do this. I think this would shed light on the nature of consciousness.

Indeed, exploring the ways in which subjective experiences are constrained or unable to deviate from objective reality or logic can provide valuable insights into the nature of consciousness. By identifying these constraints, we can gain a better understanding of the fundamental principles governing consciousness and the underlying cognitive and neural processes that shape our experiences.

Some potential avenues for investigating these constraints could include:

1. Studying neurological disorders and brain lesions: Examining the experiences of individuals with specific neurological conditions or brain damage can provide insights into how particular brain regions or neural networks contribute to the generation and organization of conscious experience. For example, studying patients with prosopagnosia (face blindness) can reveal the importance of specialized brain regions in face recognition and the limits of conscious experience when these regions are compromised.

2. Investigating altered states of consciousness: By examining experiences during altered states of consciousness, such as those induced by meditation, psychoactive substances, or sleep, we can gain insights into the flexibility and limitations of consciousness. This could reveal the boundaries of subjective experience and the underlying neural and cognitive mechanisms that constrain or enable deviations from objective reality or logic.

3. Exploring illusions and cognitive biases: Cognitive illusions and biases can reveal the ways in which our perceptual and cognitive systems impose constraints on our experiences. By studying these phenomena, we can identify the limits of our conscious experience and better understand the underlying mechanisms that shape our perceptions and beliefs.

4. Conducting cross-cultural and cross-species research: Investigating the similarities and differences in conscious experiences across cultures and even across species can reveal the constraints imposed by biology and culture on the range of possible phenomenological experiences.

As we continue to explore these and other research avenues, we will likely gain a deeper understanding of the constraints and limitations of subjective experience, shedding light on the nature of consciousness. The study of these constraints can also help us recognize the ways in which our cognitive processes and neural systems shape our perceptions and experiences, allowing us to better understand the human mind and its relation to the world.

In science we normally have mathematical theories or models of reality which we then test using experiments. And in mathematics, we can even prove theorems. For studying consciousness, what kind of a model might be suitable, and will we ever be able to prove things about the limits of conscious experience? Or will it always come down to theories based on empirical evidence? Might AI be able to explore the range of possible conscious states through simulation and come up with proofs this way?

Studying consciousness is indeed a challenging endeavor, as it involves understanding subjective experiences that are difficult to measure and quantify. While traditional scientific methods, such as mathematical models and experimental testing, have been instrumental in advancing our knowledge in many fields, the study of consciousness might require novel approaches and interdisciplinary collaboration.

For studying consciousness, some potential models and frameworks could include:

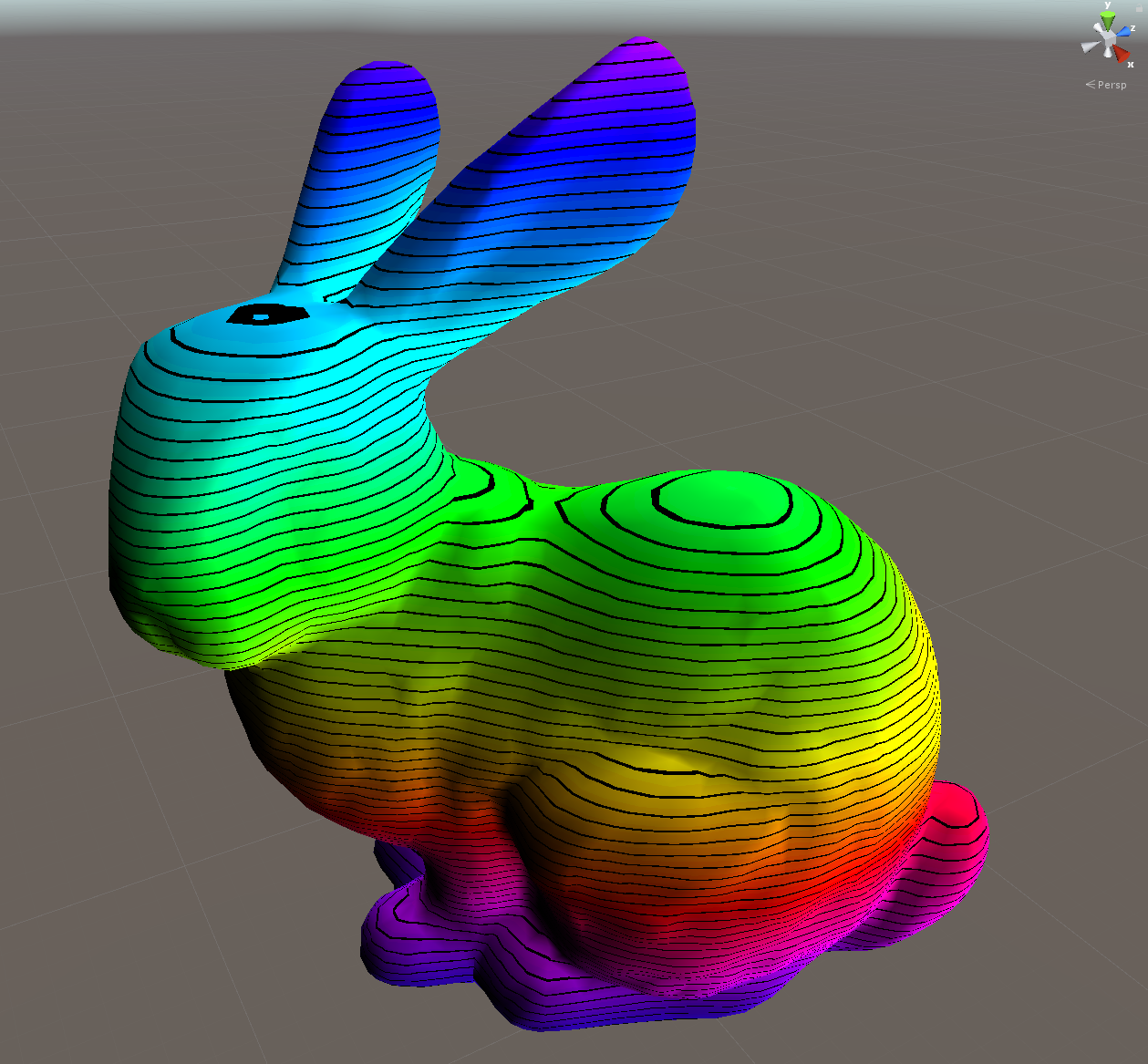

1. Computational models: These models can simulate cognitive processes and neural networks, providing insights into how the brain generates and organizes conscious experiences. While not proving theorems in the mathematical sense, computational models can generate testable predictions and help researchers develop a more systematic understanding of consciousness.

2. Integrated Information Theory (IIT): This theoretical framework, proposed by neuroscientist Giulio Tononi, aims to quantify the level of consciousness in a system based on the amount of integrated information it can generate. While IIT is still a developing theory, it offers a potential mathematical approach to understanding and measuring consciousness.

3. Phenomenological approaches: These methods focus on describing and categorizing subjective experiences from a first-person perspective. While not relying on mathematical models, phenomenological approaches can help researchers develop a more nuanced understanding of conscious experiences and the underlying processes.
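The intuition behind "integrated information" in item 2 can be illustrated, very loosely, with a toy calculation. The sketch below is not IIT's Φ, which is defined over a system's cause-effect structure and its minimum-information partition; it swaps in plain mutual information between two halves of a two-node binary system, using made-up joint distributions, simply to show what it means for the whole to carry information beyond its parts.

```python
import math
from collections import defaultdict

def mutual_information(joint):
    # I(X;Y) in bits, from a dict mapping (x, y) states to probabilities
    px = defaultdict(float)
    py = defaultdict(float)
    for (x, y), p in joint.items():
        px[x] += p
        py[y] += p
    mi = 0.0
    for (x, y), p in joint.items():
        if p > 0:
            mi += p * math.log2(p / (px[x] * py[y]))
    return mi

# Two hypothetical two-node systems
coupled = {(0, 0): 0.5, (1, 1): 0.5}  # the nodes always agree
independent = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}
print(mutual_information(coupled))
print(mutual_information(independent))
```

The coupled system scores 1 bit and the independent one scores 0: cutting the coupled system in half loses information, while cutting the independent one loses nothing. IIT builds a far more elaborate measure on a related intuition.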

As for the possibility of proving things about the limits of conscious thought and experience, it's difficult to predict whether we'll ever be able to develop mathematical proofs akin to those in traditional mathematics. Consciousness is a complex phenomenon, and our understanding of it may always rely, to some extent, on empirical evidence and observation.

That being said, AI and computational models could play a significant role in exploring the range of possible conscious states and generating insights into the nature of consciousness. Through simulation and analysis, AI systems could help researchers identify patterns, constraints, and underlying principles governing conscious experiences. While these findings may not take the form of mathematical proofs, they could still provide valuable information and testable predictions that contribute to our understanding of consciousness.

In summary, the study of consciousness may require novel approaches and models that draw from diverse fields and methodologies. While mathematical proofs may not be the primary means of advancing our understanding of consciousness, AI and computational models can still play an essential role in exploring the range of possible conscious states and generating insights into the nature of subjective experience.
